Hello! In this Tutorial I will explain how to use RoboDK x TwinCAT x Raspberry Pi4 x Coral USB to implement a Machine Learning Application for image Classification.

Let me Introduce myself again. I am an Engineer that comes from Hong Kong and now working in Japan.I do not have a University education Background, and this is also a big reason for me to try harder to learn every day.

After reading this post , I hope you can find some “idea” to integrate the edge device in your Machine and using the edge device is not as hard as we think.

To finish this task, there are so many small goals that I splitted:

- Setup the RoboDK Station

- Setup the Raspberry and Edge Device

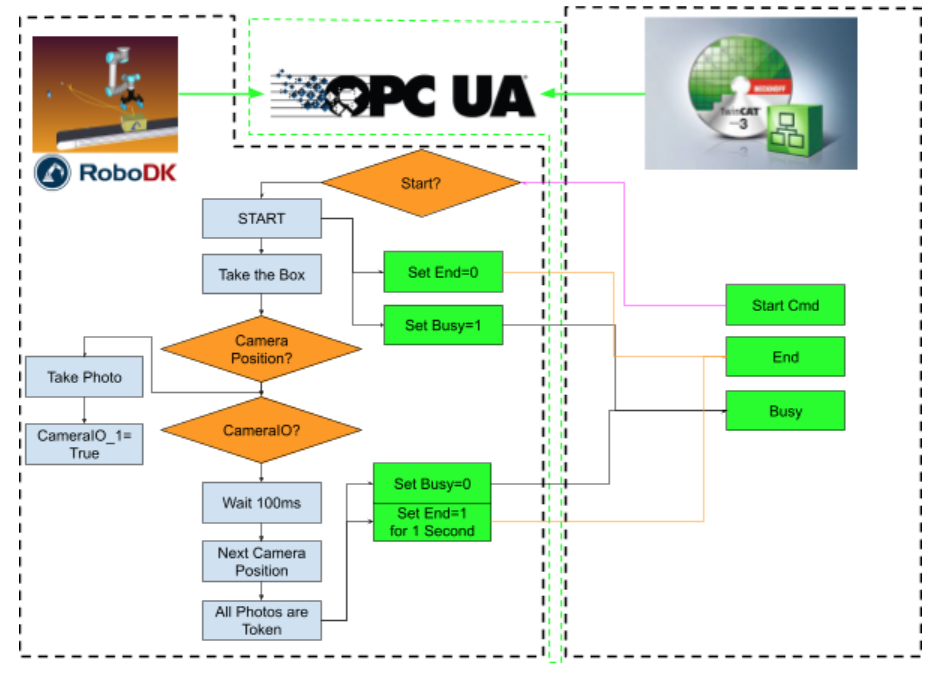

- The Handshake between RoboDK and TwinCAT

- The Handshake between TwinCAT and Raspberry

- Image Transfer from TwinCAT to Raspberry

- Visualization by using TF1800 in TwinCAT

- Know what is Transfer-Learning

- Setup the OPC UA Communication between device

- build an OPC UA Server by TF6100 in TwinCAT

- build an OPC UA Client in RobDK and Raspberry PI

- Read/Write the structure Node

It is very different to write all these topics from 0 to 100, I will provide the post link that I wrote before and please reference it to finish some small task.

Sure you can download the source-code at the end of the post.

Transfer-learning?

This post had a very clear explanation of What transfer-learning is.

Transfer learning is a machine learning method where a model developed for a task is reused as the starting point for a model on a second task.

It is a popular approach in deep learning where pre-trained models are used as the starting point on computer vision and natural language processing tasks given the vast compute and time resources required to develop neural network models on these problems and from the huge jumps in skill that they provide on related problems.

https://machinelearningmastery.com/transfer-learning-for-deep-learning/

In this Tutorial I will use the model from Coral that supports classification of 1000 objects.

Configuration

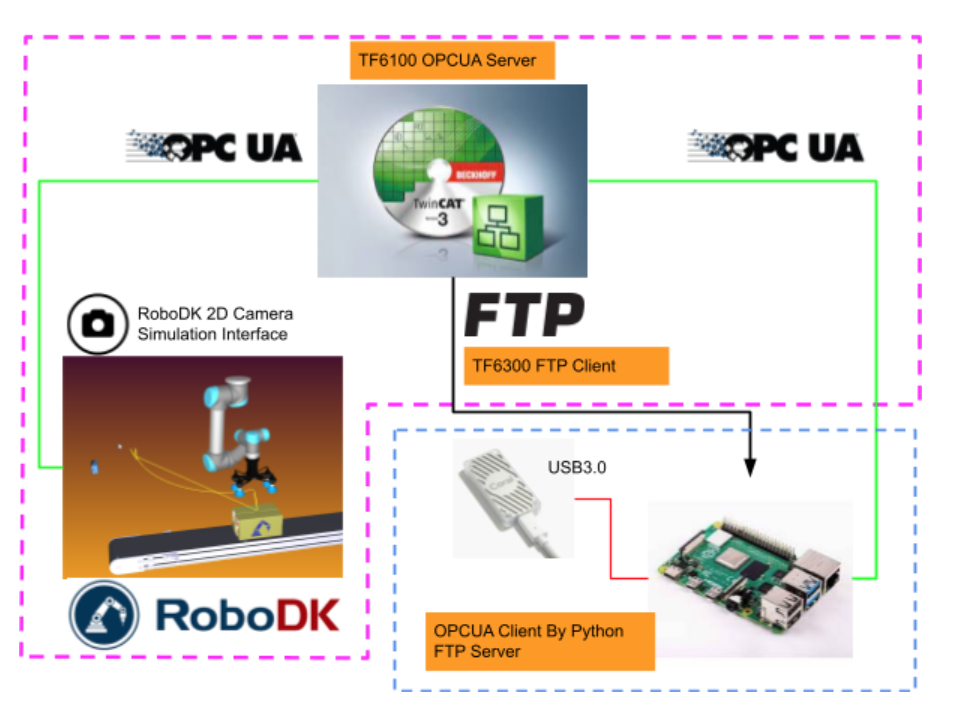

Here is the Configuration of this tutorial. We will configure the TwinCAT as an OPCUA Server by TF6100, And configure the RoboDK and Raspberry as OPC UA Client , and access the Node inside the Server.

While the virtual robot finishes the image snap, TwinCAT will send the images to Raspberry pi with FTP protocols.TwinCAT is FTP Client by using TF6300 and Raspberry is a FTP Server.

Edge Device,Coral USB is plugged into Raspberry pi in USB3.0 to speed up the Image Classification.Finally , the classification results are sent back to TwinCAT with OPCUA, and Display by TF1800.

In this example, TwinCAT and RoboDk are installed on the same PC.

Operation Concept

In this project, we have Manual Mode and Auto Mode.

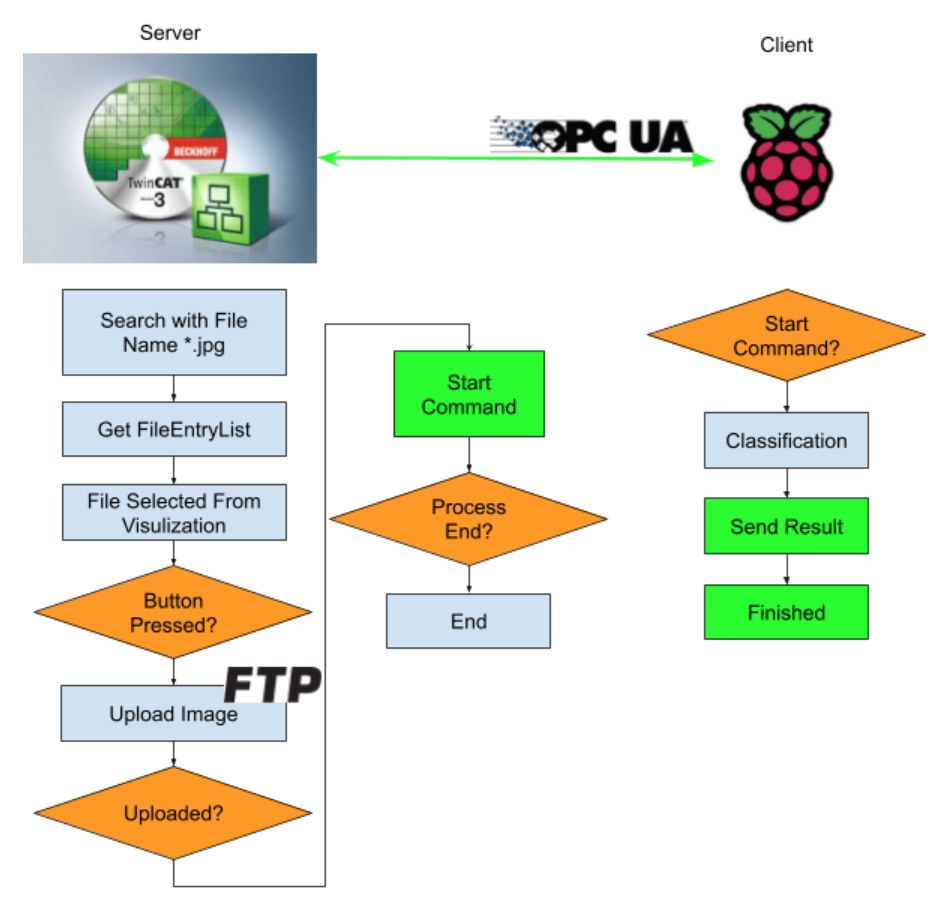

Manual Mode

Here is the Flow of Manual Mode.The operator can select which image that would like to classification, then press the send button to send to the raspberry.

After the image is received and classified , the result will send back to TwinCAT .

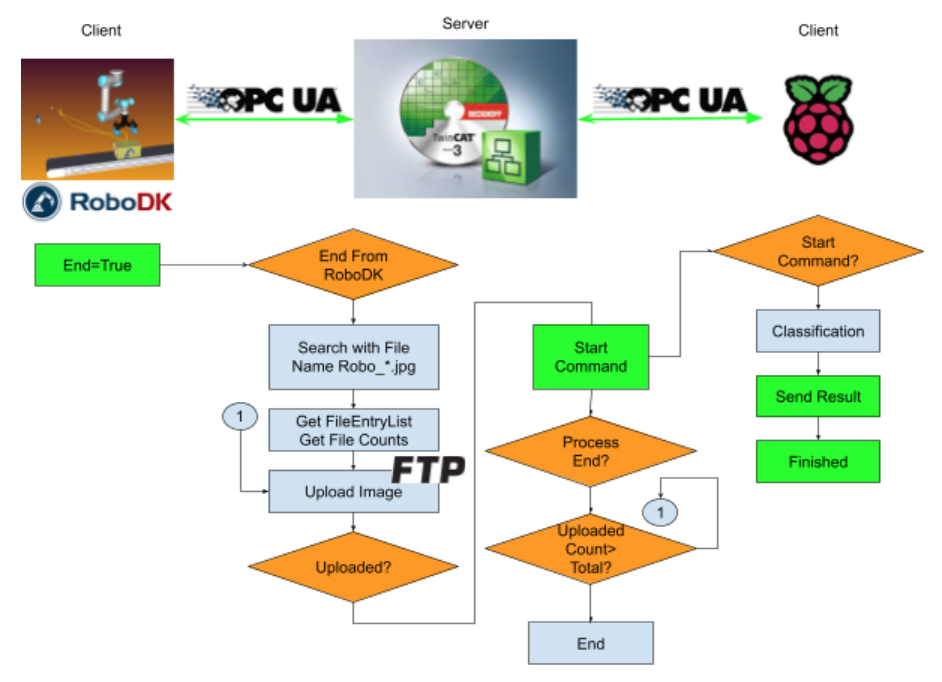

Auto Mode

Auto Mode is very Similar to Manual Mode but just has the handshake part.

TwinCAT will wait for the Program end flag from RoboDK.

If Program End Flag from RoboDK=1, TwinCAT will search all the images that included with name “Robo_*.jpg” and list up.Then TwincAT will transfer the image to raspberry one by one to get the classification result.

TwinCAT Side

There are 3 configurations that you need to set up here. They are TF6300 FTP Client, TF6100 OPC UA Server and the TF1800 Screen.

FTP Client Setup

Please reference the following Link:

OPCUA Server Setup

Please reference the following Link:

Allow to access With Structure

Insert this attribute to all the OPCUA Nodes.

| {attribute ‘OPC.UA.DA.StructuredType’ := ‘1’} |

Visualization

Please reference the following Link, you will know how to search files in the Directory and configure the screen.

Manual Screen

Auto Screen

Code

DUT

DUT_OPCUA_PI4_ClassificationResult

The Data type for the Image Classification Result

| TYPE DUT_OPCUA_PI4_ClassificationResult : STRUCT Label:STRING; Score:REAL; END_STRUCT END_TYPE |

DUT_OPCUA_PI4_TPUConfigurations

It is not being used now but planning for the Classification parameters.

| TYPE DUT_OPCUA_PI4_TPUConfigurations : STRUCT top_k:UINT:=2;//Max number of classification results Count:UINT:=5;//Number of times to run inferences mean:REAL:=128.0; std:REAL:=128.0; threshold:REAL:=0.0; END_STRUCT END_TYPE |

DUT_OPCUA_ReadFromPI4

The OPC UA Node for Raspberry pi4 to write the data.

| TYPE DUT_OPCUA_ReadFromPI4 : STRUCT bClientReady:BOOL; bTPUReady:BOOL; bBusy:BOOL; bFinished:BOOL; arrResult:ARRAY[0..5]OF DUT_OPCUA_PI4_ClassificationResult; END_STRUCT END_TYPE |

DUT_OPCUA_Write2PI4

The OPC UA Node to send the data to Raspberry PI.

| TYPE DUT_OPCUA_Write2PI4 : STRUCT bStart2Classification:BOOL; sImageName :STRING; END_STRUCT END_TYPE |

DUT_OPCUA_ReadFromRoboDK

The OPC UA Node that is sent to RoboDK.

| TYPE DUT_OPCUA_ReadFromRoboDK : STRUCT sImagePath:STRING; bImageisSaved:BOOL; bBusy:BOOL; bEnd:BOOL; END_STRUCT END_TYPE |

DUT_OPCUA_Write2RoboDK

The OPC UA Node that is received from RoboDK.

| TYPE DUT_OPCUA_Write2RoboDK : STRUCT bStartProgram:BOOL; END_STRUCT END_TYPE |

DUT_HMIFileEntry_Display

This is the DUT for HMI Display and Structured screen is used in this Project.

And also the T_FILETYPE is not easy to display in HMI, DT type is used.

| TYPE DUT_HMIFileEntry_Display : STRUCT LastAccesTime,LastModificationTime,CreationTime:DT; FileEntry:ST_FindFileEntry; ImagePath:STRING; END_STRUCT END_TYPE |

eFBStatus

The Enum status for Function Block status.

| {attribute ‘qualified_only’} {attribute ‘strict’} TYPE eFBStatus : ( Idle:=0 ,Busy:=1 ,Error:=2 ); END_TYPE |

GVL

GVL

The variables for HMI Display and signal Exchange between different POU.

| {attribute ‘qualified_only’} VAR_GLOBAL currentPath:STRING; arrResult:ARRAY[0..49,0..5]OF DUT_OPCUA_PI4_ClassificationResult; FindList:ARRAY[0..49]OF ST_FindFileEntry; nFindFiles: UDINT; bUpload:BOOL; OutputIndex:INT; ImagePath:STRING; AutoRun:BOOL; bTrigger:BOOL; hmiAutoDisplay:ARRAY[0..5]OF DUT_OPCUA_PI4_ClassificationResult; bReset:BOOL; Processing:BOOL; Processing2:BOOL; Hide:BOOL; END_VAR |

GVL_OPCUAServer

The OPC Server Node.

| {attribute ‘qualified_only’} VAR_GLOBAL {attribute ‘OPC.UA.DA’ := ‘1’} {attribute ‘OPC.UA.DA.StructuredType’ := ‘1’} ReadFromPI4:DUT_OPCUA_ReadFromPI4; {attribute ‘OPC.UA.DA’ := ‘1’} {attribute ‘OPC.UA.DA.StructuredType’ := ‘1’} WriteToPI4:DUT_OPCUA_Write2PI4; {attribute ‘OPC.UA.DA’ := ‘1’} {attribute ‘OPC.UA.DA.StructuredType’ := ‘1’} ReadFromRoboDK:DUT_OPCUA_ReadFromRoboDK; {attribute ‘OPC.UA.DA’ := ‘1’} {attribute ‘OPC.UA.DA.StructuredType’ := ‘1’} Write2RoboDK:DUT_OPCUA_Write2RoboDK; END_VAR |

FB_FTPClient

Please reference the following Link:

pFileOpeartions

Please reference the following Link:

VAR

| VAR EnumFindFileList:FB_EnumFindFileList; FindList:ARRAY[0..49]OF ST_FindFileEntry; sSearchPath:STRING; bExecute:BOOL; commnd:E_EnumCmdType; bBusy:BOOL; hmiIndex:INT:=0; bError: BOOL; nErrID: UDINT; bEOE: BOOL; nFindFiles: UDINT; hmiDisplayFileEntry:DUT_HMIFileEntry_Display; FileTime:DT; sBasicPath:STRING:=’file:\\\C:\FTPFiles\’; bDisplay:BOOL; hmiIndexDisplayBackup:INT; R_TRIG:R_TRIG; AutoRun: BOOL; F_TRIG:F_TRIG; END_VAR |

Program

| AutoRun:=GVL.AutoRun; IF NOT AutoRun THEN sSearchPath:=’C:\FTPFiles\*.jpg’; ELSE sSearchPath:=’C:\FTPFiles\RoboDK*.jpg’; END_IF R_TRIG(CLK:=GVL_OPCUAServer.ReadFromRoboDK.bEnd); IF R_TRIG.Q THEN bExecute:=TRUE; END_IF EnumFindFileList( sNetId:=” ,sPathName:=sSearchPath ,eCmd:=commnd ,pFindList:=ADR(FindList) ,cbFindList:=SIZEOF(FindList) ,bExecute:=bExecute OR R_TRIG.Q ,bBusy=>bBusy ,bError=>bError ,nErrID=>nErrID ,bEOE=>bEOE ,nFindFiles=>nFindFiles ); IF EnumFindFileList.bExecute AND (EnumFindFileList.bEOE OR EnumFindFileList.bError)THEN bExecute:=FALSE; IF AutoRun THEN GVL.bTrigger:=TRUE; END_IF END_IF IF NOT AutoRun THEN IF hmiIndex >=0 AND hmiIndex <=15 THEN hmiDisplayFileEntry.FileEntry:=FindList[hmiIndex]; END_IF IF hmiIndex <0 THEN hmiIndex:=15; ELSIF hmiIndex >15 OR hmiIndex > EnumFindFileList.nFindFiles-1 THEN hmiIndex:=0; END_IF END_IF; IF AutoRun THEN IF hmiIndex >5 THEN hmiIndex:=0; END_IF IF hmiIndex <0 THEN hmiIndex:=5; END_IF hmiDisplayFileEntry.FileEntry:=GVL.FindList[hmiIndex]; GVL.hmiAutoDisplay[0]:=GVL.arrResult[hmiIndex,0]; GVL.hmiAutoDisplay[1]:=GVL.arrResult[hmiIndex,1]; END_IF F_TRIG(CLK:=GVL.Processing); IF F_TRIG.Q THEN hmiIndex:=99; END_IF IF hmiIndex <>hmiIndexDisplayBackup THEN hmiIndexDisplayBackup:=hmiIndex; bDisplay:=TRUE; END_IF hmiDisplayFileEntry.CreationTime:= FILETIME_TO_DT( fileTime:= hmiDisplayFileEntry.FileEntry.creationTime); hmiDisplayFileEntry.LastAccesTime:= FILETIME_TO_DT( fileTime:= hmiDisplayFileEntry.FileEntry.lastAccessTime); hmiDisplayFileEntry.LastModificationTime:= FILETIME_TO_DT( fileTime:= hmiDisplayFileEntry.FileEntry.lastWriteTime); hmiDisplayFileEntry.ImagePath:=CONCAT(STR1:=sBasicPath,STR2:=hmiDisplayFileEntry.FileEntry.sFileName); GVL.currentPath:=hmiDisplayFileEntry.FileEntry.sFileName; GVL.FindList:=FindList; GVL.nFindFiles:=nFindFiles; |

piHandShakeCheck

The Handshake between RoboDK and Raspberry and TwinCAT.

VAR

| VAR Count:INT; R_TRIG:R_TRIG; TON:TON; iStep:INT; i,j:INT; bTrigger:BOOL; OutIndex:INT; testString:STRING; bRun:BOOL; bClear:BOOL; END_VAR |

Program

| IF GVL_OPCUAServer.Write2RoboDK.bStartProgram AND GVL_OPCUAServer.ReadFromRoboDK.bBusy AND GVL.AutoRun THEN GVL_OPCUAServer.Write2RoboDK.bStartProgram:=FALSE; END_IF IF NOT GVL.AutoRun THEN GVL_OPCUAServer.Write2RoboDK.bStartProgram :=FALSE; END_IF IF GVL.bReset THEN iStep:=0; GVL.bTrigger:=FALSE; GVL.bUpload:=FALSE; GVL.bReset:=FALSE; bRun:=FALSE; OutIndex:=0; END_IF IF GVL.nFindFiles >0 AND GVL.bTrigger AND NOT bRun THEN iStep:=1; bRun:=TRUE; GVL.bTrigger:=FALSE; FOR i:=0 TO 5 DO FOR j:=0 TO 5 DO GVL.arrResult[i,j].Label:=”; GVL.arrResult[i,j].Score:=0.0; END_FOR END_FOR END_IF; IF bClear THEN FOR i:=0 TO 49 DO FOR j:=0 TO 5 DO GVL.arrResult[i,j].Label:=”; GVL.arrResult[i,j].Score:=0.0; END_FOR END_FOR bClear:=FALSE; END_IF CASE iStep OF 1: GVL.OutputIndex:=OutIndex; GVL.ImagePath:=GVL.FindList[OutIndex].sFileName; GVL.bUpload:=TRUE; IF GVL_OPCUAServer.ReadFromPI4.bBusy THEN iStep:=2; END_IF 2: TON(in:=NOT GVL_OPCUAServer.ReadFromPI4.bBusy ,PT:=T#0.3S); IF TON.Q THEN TON(IN:=FALSE); iStep:=3; END_IF GVL.bUpload:=FALSE; 3: GVL.arrResult[OutIndex,0]:=GVL_OPCUAServer.ReadFromPI4.arrResult[0]; GVL.arrResult[OutIndex,1]:=GVL_OPCUAServer.ReadFromPI4.arrResult[1]; OutIndex:=OutIndex+1; iStep:=99; 99: IF OutIndex > GVL.nFindFiles-1 THEN OutIndex:=0; bRun:=FALSE; iStep:=0; ELSE iStep:=1; END_IF END_CASE GVL.Processing:=iStep=0 ; GVL.Processing2:=NOT GVL_OPCUAServer.ReadFromRoboDK.bBusy; GVL.Hide:=NOT GVL.Processing OR GVL_OPCUAServer.ReadFromRoboDK.bBusy; |

Main

The program for FTP Control and send the command to Raspberry.

VAR

| VAR FTPBasicClientEx:FB_FTPClient; bUpload:BOOL; bDownload:BOOL; iStep:INT; hrUpload:DINT; hrDownload:DINT; TON:TON; iFtpStep:INT; F_TRIG_Upload,F_TRIG_Download:F_TRIG; sSendImage:STRING; sBasicSrcPath:STRING:=’C:\FTPFiles\’; sBasicDestPath:STRING:=’/files/’; Count:INT; R_TRIG:R_TRIG; AutoRun:BOOL; END_VAR |

Program

| AutoRun:=GVL.AutoRun; //FTP //Upload Progress4 IF NOT AutoRun THEN FTPBasicClientEx.SrcFilePath:=CONCAT(STR1:=sBasicSrcPath,STR2:=GVL.currentPath); FTPBasicClientEx.DestFilePath:=CONCAT(STR1:=sBasicDestPath,STR2:=GVL.currentPath); ELSE FTPBasicClientEx.SrcFilePath:=CONCAT(STR1:=sBasicSrcPath,STR2:=GVL.ImagePath); FTPBasicClientEx.DestFilePath:=CONCAT(STR1:=sBasicDestPath,STR2:=GVL.ImagePath); END_IF IF AutoRun THEN bUpload:=GVL.bUpload; END_IF IF bUpload AND NOT bDownload AND iFtpStep=0 THEN FTPBasicClientEx.UserName:=’pi’; FTPBasicClientEx.Password:=’raspberry’; FTPBasicClientEx.Host:=’192.168.3.14′; FTPBasicClientEx.Port:=DEFAULT_FTP_PORT; iFtpStep:=1; END_IF //Download Progress IF bDownload AND NOT bUpload AND iFtpStep=0 THEN FTPBasicClientEx.UserName:=’pi’; FTPBasicClientEx.Password:=’raspberry’; FTPBasicClientEx.Host:=’192.168.3.14′; FTPBasicClientEx.Port:=DEFAULT_FTP_PORT; FTPBasicClientEx.SrcFilePath:=’/files/log.txt’; FTPBasicClientEx.DestFilePath:=’ C:\FTPFiles\log.txt’; hrDownload:=FTPBasicClientEx.Download(bDownload); iFtpStep:=2; END_IF //Function Block FTPBasicClientEx(); //Status Control CASE iFtpStep OF 1: hrUpload:=FTPBasicClientEx.Upload(bUpload); F_TRIG_Upload(CLK:= hrUpload = eFBStatus.Busy); IF F_TRIG_Upload.Q THEN iFtpStep:=0; GVL_OPCUAServer.WriteToPI4.bStart2Classification:=TRUE; GVL_OPCUAServer.WriteToPI4.sImageName:=FTPBasicClientEx.DestFilePath; bUpload:=FALSE; hrUpload:=FTPBasicClientEx.Upload(FALSE); END_IF 2: hrUpload:=FTPBasicClientEx.Download(bDownload); F_TRIG_Download(CLK:= hrDownload = eFBStatus.Busy); IF F_TRIG_Download.Q THEN iFtpStep:=0; hrUpload:=FTPBasicClientEx.Download(FALSE); END_IF END_CASE //Flag Reset IF bDownload AND hrDownload >0 THEN bDownload:=FALSE; END_IF TON( IN:=GVL_OPCUAServer.WriteToPI4.bStart2Classification ,PT:=T#0.4S ); IF TON.Q THEN GVL_OPCUAServer.WriteToPI4.bStart2Classification:=FALSE; END_IF // pFileOpeartions(); piHandShakeCheck(); |

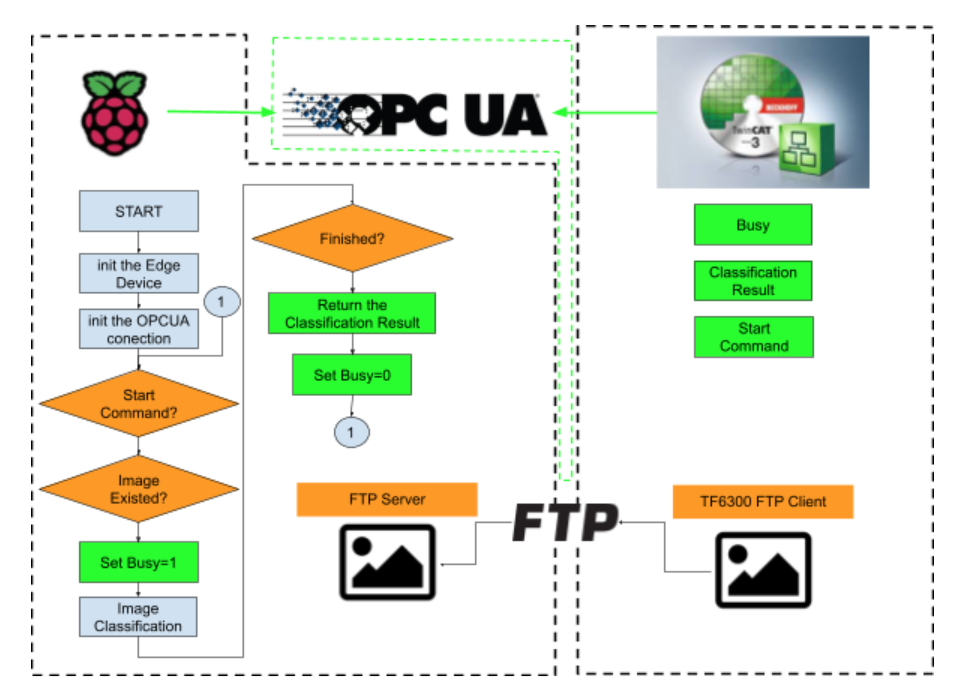

Raspberry PI4 Side

Please reference the following Link to easily configure your Raspberry.

FTP Sever Setup

Please reference the following Link:

Coral USB Setup

Please reference the following Link:

Model

This model is used in this time to classificate 1000 objects.

https://coral.ai/models/image-classification/

OPCUA Client Setup

We will use python-opcua in this tutorial.

https://github.com/FreeOpcUa/python-opcua

Install

| pip install opcua |

File Configuration

Code

| import numpy as np import time from PIL import Image from pycoral.adapters import classify from pycoral.adapters import common from pycoral.utils.dataset import read_label_file from pycoral.utils.edgetpu import make_interpreter from opcua import Client from opcua import ua import os.path import datetime #OPCUA Setup ENDPOINT=”opc.tcp://192.168.3.124:4840″ client=Client(ENDPOINT) NodeID2Server=’ns=4;s=GVL_OPCUAServer.ReadFromPI4′ NodeIDFromServer=’ns=4;s=GVL_OPCUAServer.WriteToPI4′ BASEPATH=’/home/pi/ftp’ #TPU Setup LABELPATH=’imagenet_labels.txt’ MODELPATH=’mobilenet_v1_0.25_128_quant_edgetpu.tflite’ top_k=2 threshold=0.0 count=2 input_mean=128 input_std=128 DEBUG=False def getCurrentTime(): return str(datetime.datetime.now()) def writetext2File(message): with open(‘/home/pi/ftp/files/log.txt’, ‘a’) as log: msg=getCurrentTime()+’:’+message+’\n’ print(msg) log.write(msg) def main(): try: client=Client(ENDPOINT) writetext2File(‘Connecting to OPCUA Server in EndPoint:’+ENDPOINT) client.connect() writetext2File(‘Connecting to OPCUA Server in EndPoint:’+ENDPOINT+’..OK!’) Node2Server=client.get_node(NodeID2Server) NodeFromServer=client.get_node(NodeIDFromServer) client.load_type_definitions() Node2ServerValue=Node2Server.get_value() NodeFromServerValue=NodeFromServer.get_value() Node2ServerValue.bTPUReady=False ReadyCount=0 CommandReceived=False Step=0 #TPU Init #Load the Label labels = read_label_file(LABELPATH) if LABELPATH else {} writetext2File(‘Label is Loaded.’+LABELPATH) #Load the Model interpreter = make_interpreter(*MODELPATH.split(‘@’)) interpreter.allocate_tensors() writetext2File(‘Model is Loaded.’+MODELPATH) # Model must be uint8 quantized if common.input_details(interpreter, ‘dtype’) != np.uint8: raise ValueError(‘Only support uint8 input type.’) ImageFiles=None while True: NodeFromServerValue=NodeFromServer.get_value() ReadyCount=ReadyCount+1 Node2ServerValue.bTPUReady=True Node2ServerValue.bClientReady=True if ReadyCount<=200: Node2ServerValue.bClientReady=False else: Node2ServerValue.bClientReady=True ReadyCount=ReadyCount+1 if ReadyCount >=400: ReadyCount=0 if NodeFromServerValue.bStart2Classification and not CommandReceived: #Command from OPCUA Server writetext2File(‘Command is recevied..’) print(‘Command is received..’) CommandReceived=True writetext2File(‘Path is :’+BASEPATH+NodeFromServerValue.sImageName) print(“path with:”+BASEPATH+NodeFromServerValue.sImageName) #Node2Server.set_value(ua.DataValue(Node2ServerValue)) ImageFiles=os.path.isfile(BASEPATH+NodeFromServerValue.sImageName) print(‘—-‘) if CommandReceived and ImageFiles : Node2ServerValue.bBusy=True #Node2Server.set_value(ua.DataValue(Node2ServerValue)) print(‘Image is found..’) writetext2File(‘Path is found.’) if Step == 2: i=0 # Run inference writetext2File(‘Inferenice with time.,The first inference on Edge TPU is slow because it includes loading the model into Edge TPU memory.’) for _ in range(count): start = time.perf_counter() interpreter.invoke() inference_time = time.perf_counter() – start classes = classify.get_classes(interpreter, top_k, threshold) writetext2File(‘%.1fms’ % (inference_time * 1000)) for c in classes: writetext2File(‘%s: %.5f’ % (labels.get(c.id, c.id), c.score)) Node2ServerValue.arrResult[i].Label=labels.get(c.id, c.id) Node2ServerValue.arrResult[i].Score=c.score i=i+1 Step=99 if Step == 1: params = common.input_details(interpreter, ‘quantization_parameters’) scale = params[‘scales’] zero_point = params[‘zero_points’] mean = input_mean std = input_std writetext2File(‘Step is 1,Initing the Parameters.’) if abs(scale * std – 1) < 1e-5 and abs(mean – zero_point) < 1e-5: # Input data does not require preprocessing. common.set_input(interpreter, image) else: # Input data requires preprocessing normalized_input = (np.asarray(image) – mean) / (std * scale) + zero_point np.clip(normalized_input, 0, 255, out=normalized_input) common.set_input(interpreter, normalized_input.astype(np.uint8)) Step=2 if Step == 0: size = common.input_size(interpreter) s=BASEPATH+NodeFromServerValue.sImageName image = Image.open(s).convert(‘RGB’).resize(size, Image.ANTIALIAS) Step=1 writetext2File(‘Step is 0,Got the image from TwinCAT and Open it.’) for r in Node2ServerValue.arrResult: r.Label=” r.Score=0.0 #not NodeFromServerValue.bStart2Classification and if Step > 0 and Step <99: Node2ServerValue.bBusy=True print(‘–t’) else: CommandReceived=False Node2ServerValue.bBusy=False Step=0 ImageFiles=None Node2Server.set_value(ua.DataValue(Node2ServerValue)) finally: client.disconnect() writetext2File(‘Disconnected from OPCUA Sever.’) if __name__ == ‘__main__’: writetext2File(‘Script is started.’) main() |

RoboDK

The role of RoboDk in this project is only while in Auto Mode.RoboDK is not necessary in here but thanks for their user-friendly interface,python api and 2D Camera Simulation interface, I will test more operation between Robot ,TwinCAT,Camera and be closely to the real environment.

StationSetup

The station is operated to take the box and move to the couples Snap shot position and snap it , saved to the file system.

2D Camera Simulation Interface

Please reference the following Link:

OPCUA Client Setup

I am using the same library as Raspberry.

https://github.com/FreeOpcUa/python-opcua

Please reference the following Link:

Install

| pip install opcua |

Code

| from robodk import robolink # RoboDK API from robodk import robomath # Robot toolbox import datetime import time from opcua import Client,ua import random RDK = robolink.Robolink() UR=RDK.Item(“UR5”) CameraPosition1=RDK.Item(‘tCamera1’) CameraPosition2=RDK.Item(‘tCamera2’) CameraPosition3=RDK.Item(‘tCamera3’) CameraPosition4=RDK.Item(‘tCamera4’) CameraPosition5=RDK.Item(‘tCamera5’) CameraPosition6=RDK.Item(‘tCamera6’) Prog=RDK.Item(‘pMain’) ENDPOINT=’opc.tcp://127.0.0.1:4840′ NODEID2Server=’ns=4;s=GVL_OPCUAServer.ReadFromRoboDK’ NODEIDFromServer=’ns=4;s=GVL_OPCUAServer.Write2RoboDK’ client=Client(ENDPOINT) client.connect() cam_item = RDK.Item(“Camera1”) if not cam_item.Valid(): print(‘No camera..’) quit() snapshot_file=’C:\FTPFiles\p1.jpg’ #Make Dir #ret=RDK.Cam2D_Snapshot(snapshot_file,cam_item) def createImagePath(s): return “C:\FTPFiles\RoboDK”+getCurrentTime()+”.jpg” def getCurrentTime(): return time.strftime(‘%Y_%m_%d_%H_%M_%S_’+str(random.randint(0,99))) def Sanp(Path,Cam): return RDK.Cam2D_Snapshot(Path,Cam) flag=[False,False,False,False,False,False,] client.load_type_definitions() Node2Server=client.get_node(NODEID2Server) NodeFromServer=client.get_node(NODEIDFromServer) NodeFromServerValue=NodeFromServer.get_value() Node2ServerValue=Node2Server.get_value() counts=0 start=0 end=0 _busy=False while True: NodeFromServerValue=NodeFromServer.get_value() Node2ServerValue=Node2Server.get_value() Pose=UR.Pose() if not _busy and NodeFromServerValue.bStartProgram : _busy=True value = Prog.RunProgram() if Prog.Busy(): Node2ServerValue.bBusy=True if Pose == CameraPosition1.Pose() and not flag[0]: ret=Sanp(createImagePath(“001”),cam_item) if ret : UR.setDO(‘CamIO_1’,1) flag[0]=True elif Pose == CameraPosition2.Pose()and not flag[1]: ret=Sanp(createImagePath(“002”),cam_item) if ret: UR.setDO(‘CamIO_2’,1) flag[1]=True elif Pose == CameraPosition3.Pose()and not flag[2]: ret=Sanp(createImagePath(“003”),cam_item) if ret: UR.setDO(‘CamIO_3’,1) flag[2]=True elif Pose == CameraPosition4.Pose()and not flag[3]: ret=Sanp(createImagePath(“004”),cam_item) if ret: UR.setDO(‘CamIO_4’,1) flag[3]=True elif Pose == CameraPosition5.Pose()and not flag[4]: ret=Sanp(createImagePath(“005”),cam_item) if ret: UR.setDO(‘CamIO_5’,1) flag[4]=True elif Pose == CameraPosition6.Pose()and not flag[5]: ret=Sanp(createImagePath(“006”),cam_item) if ret: UR.setDO(‘CamIO_6’,1) flag[5]=True if flag[0] and flag[1] and flag[2] and flag[3] and flag[4] and flag[5]: Node2ServerValue.bEnd=True start=time.time() else: Node2ServerValue.bBusy=False for i in range(0,6): flag[i]=False if Node2ServerValue.bEnd: if time.time()-start > 2: Node2ServerValue.bEnd=False _busy=False Node2Server.set_value(ua.DataValue(Node2ServerValue)) |

Result-Manual

Result-Auto

Source Code

https://github.com/soup01Threes/TwinCAT3/blob/main/DemoProject_TwinCATxRoboDKxRaspberryxML.zip

Finally

In Conclusion, There is an issue that I am facing now and still finding the solutions.

Image Transfer from TwinCAT to Raspberry time is too long.If there is some other method to share files between PC and Raspberry, would you please inform me?

My first plan is using TwinCAT TF3800(Machine Learning Function).Although the conversion between tfile to onnx is successful, I can not convert this onnx model to Beckhoff XML format by using these tools. The error shows something like “Format not supported”(actually I forgot it..).After couple of times of try and error, I choose the configuration in this tutorial – using Edge Devices.

If the conversion between onnx to Beckhoff XML format, the next challenges would be – How to pass the 128x128x images to the TwinCAT and integrated with Tc3_Vision.

And Also – It may be TF3800 does not support Tensorflow Lite currently.

The reason that I am using TwinCAT – They support so many different drivers without additional hardware.we change to ModbusTCP/EIP or any other protocols depending on the applications.

When I was trying to use the Coral TPU in my Windows PC with TwinCAT Runtime, the installation could not be finished because of some errors. But I can install it with my Raspberry Pi4 in 5 mins without any problem.That is the reason why I use raspberry pi.

In this Post Coral USB is used,But you can also choose other edge devices:)

RobDK is installed in the PC with TwinCAT, but you can also install it in the RaspberryPI.

But for the image processing reason, integrating into RoboDK may be better.You do not need to use FTP to send the image anymore.

Also RoboDK is not a must have option here but Thanks for their user-friendly interface and python api, I can implement my station in just 10 mins.

The important thing that i know while i played with this project – you need to split the big goal to so many small goal and clear it one by one.While you make sandwich,you will wash and cut the vegetables, cut the hams and bread , make the egg salad and then finally you will combine it to Sandwich.

And Also, So many people(me) although they know how to wash and cut the vegetables, cut the hams and bread, It is very different to have a mind breaking – Hey! I can combine these to make a sandwich!! – connect all the dots that you have now, and make a new picture.

Finally, I am using the model to make the image classification. There are so many targets for me in the future – Object Detection,Pose estimation, Semantic segmentation,etc.. but also training my own model, tuning.

I hope all of you will like this tutorial. Thanks.